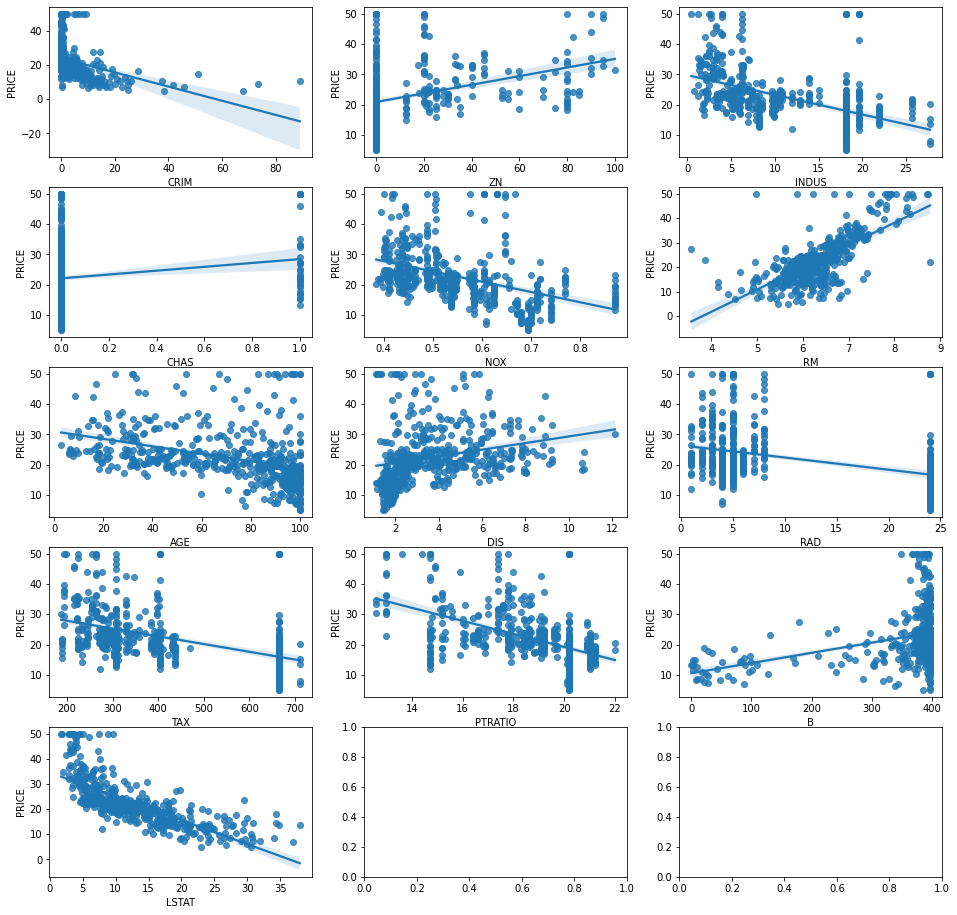

01 [Linear Regression Analysis + Scatter Plot / Linear Regression Plot]

Predicting housing prices from environmental factors

!pip install scikit-learn

import numpy as np

import pandas as pd

from sklearn.datasets import load_boston

boston = load_boston()

/usr/local/lib/python3.8/dist-packages/sklearn/utils/deprecation.py:87: FutureWarning: Function load_boston is deprecated; `load_boston` is deprecated in 1.0 and will be removed in 1.2.

The Boston housing prices dataset has an ethical problem. You can refer to

the documentation of this function for further details.

The scikit-learn maintainers therefore strongly discourage the use of this

dataset unless the purpose of the code is to study and educate about

ethical issues in data science and machine learning.

In this special case, you can fetch the dataset from the original

source::

import pandas as pd

import numpy as np

data_url = "http://lib.stat.cmu.edu/datasets/boston"

raw_df = pd.read_csv(data_url, sep="\s+", skiprows=22, header=None)

data = np.hstack([raw_df.values[::2, :], raw_df.values[1::2, :2]])

target = raw_df.values[1::2, 2]

Alternative datasets include the California housing dataset (i.e.

:func:`~sklearn.datasets.fetch_california_housing`) and the Ames housing

dataset. You can load the datasets as follows::

from sklearn.datasets import fetch_california_housing

housing = fetch_california_housing()

for the California housing dataset and::

from sklearn.datasets import fetch_openml

housing = fetch_openml(name="house_prices", as_frame=True)

for the Ames housing dataset.

warnings.warn(msg, category=FutureWarning)

print(boston.DESCR)

.. _boston_dataset:

Boston house prices dataset

---------------------------

**Data Set Characteristics:**

:Number of Instances: 506

:Number of Attributes: 13 numeric/categorical predictive. Median Value (attribute 14) is usually the target.

:Attribute Information (in order):

- CRIM per capita crime rate by town

- ZN proportion of residential land zoned for lots over 25,000 sq.ft.

- INDUS proportion of non-retail business acres per town

- CHAS Charles River dummy variable (= 1 if tract bounds river; 0 otherwise)

- NOX nitric oxides concentration (parts per 10 million)

- RM average number of rooms per dwelling

- AGE proportion of owner-occupied units built prior to 1940

- DIS weighted distances to five Boston employment centres

- RAD index of accessibility to radial highways

- TAX full-value property-tax rate per $10,000

- PTRATIO pupil-teacher ratio by town

- B 1000(Bk - 0.63)^2 where Bk is the proportion of black people by town

- LSTAT % lower status of the population

- MEDV Median value of owner-occupied homes in $1000's

:Missing Attribute Values: None

:Creator: Harrison, D. and Rubinfeld, D.L.

This is a copy of UCI ML housing dataset.

https://archive.ics.uci.edu/ml/machine-learning-databases/housing/

This dataset was taken from the StatLib library which is maintained at Carnegie Mellon University.

The Boston house-price data of Harrison, D. and Rubinfeld, D.L. 'Hedonic

prices and the demand for clean air', J. Environ. Economics & Management,

vol.5, 81-102, 1978. Used in Belsley, Kuh & Welsch, 'Regression diagnostics

...', Wiley, 1980. N.B. Various transformations are used in the table on

pages 244-261 of the latter.

The Boston house-price data has been used in many machine learning papers that address regression

problems.

.. topic:: References

- Belsley, Kuh & Welsch, 'Regression diagnostics: Identifying Influential Data and Sources of Collinearity', Wiley, 1980. 244-261.

- Quinlan, R. (1993). Combining Instance-Based and Model-Based Learning. In Proceedings on the Tenth International Conference of Machine Learning, 236-243, University of Massachusetts, Amherst. Morgan Kaufmann.

boston_df = pd.DataFrame(boston.data, columns = boston.feature_names)

boston_df.head()

boston_df['PRICE'] = boston.target

boston_df.head()

print('Boston housing price dataset size:', boston_df.shape)

Boston housing price dataset size: (506, 14)

boston_df.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 506 entries, 0 to 505

Data columns (total 14 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 CRIM 506 non-null float64

1 ZN 506 non-null float64

2 INDUS 506 non-null float64

3 CHAS 506 non-null float64

4 NOX 506 non-null float64

5 RM 506 non-null float64

6 AGE 506 non-null float64

7 DIS 506 non-null float64

8 RAD 506 non-null float64

9 TAX 506 non-null float64

10 PTRATIO 506 non-null float64

11 B 506 non-null float64

12 LSTAT 506 non-null float64

13 PRICE 506 non-null float64

dtypes: float64(14)

memory usage: 55.5 KB

Building the analysis model, analyzing the results, and visualizing them

from sklearn.linear_model import LinearRegression

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error, r2_score

# Split the data into X (features) and Y (target)

Y = boston_df['PRICE']

X = boston_df.drop(['PRICE'], axis = 1, inplace = False)

# Split into training data and test data

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size = 0.3, random_state = 156)
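As a rough illustration of what the split above does, the following standard-library sketch shuffles the row indices with a fixed seed and holds out 30% of them. It is not scikit-learn's implementation: the helper name `split_indices` is hypothetical, the shuffle order will not match `train_test_split` even with the same seed, and rounding the test share up to a whole row is an assumption about how a fractional `test_size` becomes a row count.

```python
import math
import random

def split_indices(n_samples, test_size=0.3, seed=156):
    """Return (train_idx, test_idx) after a seeded shuffle of 0..n_samples-1."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)       # same seed -> same shuffle
    n_test = math.ceil(test_size * n_samples)  # assumed: test share rounded up
    return indices[n_test:], indices[:n_test]

train_idx, test_idx = split_indices(506)
print(len(train_idx), len(test_idx))  # 354 152
```

Fixing the seed is what makes the reported metrics reproducible from run to run. A different `random_state` gives a different split and therefore slightly different scores.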

# Linear regression: create the model

lr = LinearRegression()

# Linear regression: train the model

lr.fit(X_train, Y_train)

LinearRegression()
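`LinearRegression` fits an ordinary least squares model. To give a feel for what `fit` estimates, here is a minimal sketch of the closed-form solution for the single-feature case, written with the standard library only. The function name `fit_simple_ols` and the data points are made up for illustration. The model in this post uses 13 features, which this sketch does not handle.

```python
def fit_simple_ols(xs, ys):
    """Closed-form least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope is the covariance of x and y divided by the variance of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Made-up points lying exactly on y = 1 + 2x
intercept, slope = fit_simple_ols([0, 1, 2, 3], [1, 3, 5, 7])
print(intercept, slope)  # 1.0 2.0
```

The values returned here play the same role as `lr.intercept_` and `lr.coef_` in the fitted model.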

# Linear regression: predict on the test data -> obtain the predictions Y_predict

Y_predict = lr.predict(X_test)

mse = mean_squared_error(Y_test, Y_predict)

rmse = np.sqrt(mse)

print('MSE : {0:.3f}, RMSE : {1:.3f}'.format(mse, rmse))

print('R^2(Variance score) : {0:.3f}'.format(r2_score(Y_test, Y_predict)))

MSE : 17.297, RMSE : 4.159

R^2(Variance score) : 0.757
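The three scores printed above are related by simple formulas: MSE is the mean of the squared errors, RMSE is its square root (here, the square root of 17.297 is about 4.159), and R^2 is one minus the ratio of the residual sum of squares to the total sum of squares. The sketch below computes them by hand with the standard library. The function name `regression_metrics` and the four data points are made up for illustration and are not taken from the dataset.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return (mse, rmse, r2) for two equal-length sequences."""
    n = len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    mse = ss_res / n
    rmse = math.sqrt(mse)
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)          # total sum of squares
    r2 = 1 - ss_res / ss_tot
    return mse, rmse, r2

# Made-up values for illustration
y_true = [20.0, 25.0, 30.0, 35.0]
y_pred = [22.0, 24.0, 29.0, 37.0]
mse, rmse, r2 = regression_metrics(y_true, y_pred)
print('MSE : {0:.3f}, RMSE : {1:.3f}'.format(mse, rmse))  # MSE : 2.500, RMSE : 1.581
print('R^2 : {0:.3f}'.format(r2))                         # R^2 : 0.920
```

Because RMSE is in the same unit as the target, the RMSE of 4.159 above can be read as a typical error of roughly $4,159, since `PRICE` is expressed in $1000's.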

print('Y-intercept:', lr.intercept_)

print('Regression coefficients:', np.round(lr.coef_, 1))

Y-intercept: 40.99559517216477

Regression coefficients: [ -0.1 0.1 0. 3. -19.8 3.4 0. -1.7 0.4 -0. -0.9 0. -0.6]

coef = pd.Series(data = np.round(lr.coef_, 2), index = X.columns)

coef.sort_values(ascending = False)

RM 3.35

CHAS 3.05

RAD 0.36

ZN 0.07

INDUS 0.03

AGE 0.01

B 0.01

TAX -0.01

CRIM -0.11

LSTAT -0.57

PTRATIO -0.92

DIS -1.74

NOX -19.80

dtype: float64

Visualizing the regression results with scatter plots and linear regression lines

import matplotlib.pyplot as plt

import seaborn as sns

fig, axs = plt.subplots(figsize = (16, 16), ncols = 3, nrows = 5)

x_features = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE', 'DIS', 'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT']

for i, feature in enumerate(x_features):

    row = i // 3

    col = i % 3

    sns.regplot(x = feature, y = 'PRICE', data = boston_df, ax = axs[row][col])
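The loop above places each feature in a 5 x 3 grid by turning its position in the list into a row and a column. A small standard-library sketch of that index arithmetic, using `divmod` as an equivalent of the separate integer division and remainder, shows where each plot lands and which cells stay empty. The names `positions` and `unused` are introduced here for illustration only.

```python
n_rows, n_cols = 5, 3
x_features = ['CRIM', 'ZN', 'INDUS', 'CHAS', 'NOX', 'RM', 'AGE',
              'DIS', 'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT']

# divmod(i, n_cols) returns (i // n_cols, i % n_cols), i.e. (row, col)
positions = {feature: divmod(i, n_cols) for i, feature in enumerate(x_features)}
print(positions['CRIM'], positions['RM'], positions['LSTAT'])  # (0, 0) (1, 2) (4, 0)

# Grid cells that receive no plot because 13 features fill only 13 of 15 cells
used = set(positions.values())
unused = [(r, c) for r in range(n_rows) for c in range(n_cols) if (r, c) not in used]
print(unused)  # [(4, 1), (4, 2)]
```

The two unused cells are the empty axes that appear at the end of the last row of the figure.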

02 [Regression Analysis + Scatter Plot / Linear Regression Plot]

Predicting car fuel economy from vehicle attributes

import numpy as np

import pandas as pd

data_df = pd.read_csv('auto-mpg.csv', header = 0, engine = 'python')

print('Dataset size: ', data_df.shape)

data_df.head()

data_df = data_df.drop(['car_name', 'origin', 'horsepower'], axis = 1, inplace = False)

data_df.head()

print('Dataset size: ', data_df.shape)

Dataset size: (398, 6)

data_df.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 398 entries, 0 to 397

Data columns (total 6 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 mpg 398 non-null float64

1 cylinders 398 non-null int64

2 displacement 398 non-null float64

3 weight 398 non-null int64

4 acceleration 398 non-null float64

5 model_year 398 non-null int64

dtypes: float64(3), int64(3)

memory usage: 18.8 KB

Building the analysis model, analyzing the results, and visualizing them

from sklearn.linear_model import LinearRegression

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error, r2_score

# Split the data into X (features) and Y (target)

Y = data_df['mpg']

X = data_df.drop(['mpg'], axis = 1, inplace = False)

# Split into training data and test data

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size = 0.3, random_state = 0)

# Linear regression: create the model

lr = LinearRegression()

# Linear regression: train the model

lr.fit(X_train, Y_train)

LinearRegression()

# Linear regression: predict on the test data -> obtain the predictions Y_predict

Y_predict = lr.predict(X_test)

mse = mean_squared_error(Y_test, Y_predict)

rmse = np.sqrt(mse)

print('MSE : {0:.3f}, RMSE : {1:.3f}'.format(mse, rmse))

print('R^2(Variance score) : {0:.3f}'.format(r2_score(Y_test, Y_predict)))

MSE : 12.278, RMSE : 3.504

R^2(Variance score) : 0.808

print('Y-intercept:', np.round(lr.intercept_, 2))

print('Regression coefficients:', np.round(lr.coef_, 2))

Y-intercept: -17.55

Regression coefficients: [-0.14 0.01 -0.01 0.2 0.76]
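A linear regression prediction is the intercept plus the sum of each coefficient times its feature value. The sketch below applies that formula to the rounded numbers printed above, in the column order of `X` (cylinders, displacement, weight, acceleration, model_year). The `car` values are illustrative inputs chosen here, not rows from the dataset.

```python
# Rounded intercept and coefficients as printed above
intercept = -17.55
coef = {'cylinders': -0.14, 'displacement': 0.01, 'weight': -0.01,
        'acceleration': 0.20, 'model_year': 0.76}

# Illustrative input values (an assumption for this example)
car = {'cylinders': 8, 'displacement': 350, 'weight': 3200,
       'acceleration': 22, 'model_year': 99}

# prediction = intercept + sum(coefficient * feature value)
approx_mpg = intercept + sum(coef[name] * car[name] for name in coef)
print(round(approx_mpg, 2))  # 32.47
```

This figure is only approximate, because the coefficients were rounded to two decimals. A rounding error of up to 0.005 on the `weight` coefficient alone can shift the result by up to 16 mpg when the weight is 3200, so real predictions should come from `lr.predict` or the unrounded `lr.coef_`.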

coef = pd.Series(data = np.round(lr.coef_, 2), index = X.columns)

coef.sort_values(ascending = False)

model_year 0.76

acceleration 0.20

displacement 0.01

weight -0.01

cylinders -0.14

dtype: float64

Visualizing the regression results with scatter plots and linear regression lines

import matplotlib.pyplot as plt

import seaborn as sns

fig, axs = plt.subplots(figsize = (16, 16), ncols = 3, nrows = 2)

x_features = ['model_year', 'acceleration', 'displacement', 'weight', 'cylinders']

plot_color = ['r', 'b', 'y', 'g', 'r']

for i, feature in enumerate(x_features):

    row = i // 3

    col = i % 3

    sns.regplot(x = feature, y = 'mpg', data = data_df, ax = axs[row][col], color = plot_color[i])

print("Enter the details of the car whose fuel economy you want to predict.")

cylinders_1 = int(input("cylinders : "))

displacement_1 = float(input("displacement : "))

weight_1 = int(input("weight : "))

acceleration_1 = float(input("acceleration : "))

model_year_1 = int(input("model_year : "))

Enter the details of the car whose fuel economy you want to predict.

cylinders : 8

displacement : 350

weight : 3200

acceleration : 22

model_year : 99

# Pass the inputs as a DataFrame with the training column names so that predict() does not warn about missing feature names

car_df = pd.DataFrame([[cylinders_1, displacement_1, weight_1, acceleration_1, model_year_1]], columns = X.columns)

mpg_predict = lr.predict(car_df)

print("The predicted fuel economy (MPG) of this car is %.2f." % mpg_predict[0])

The predicted fuel economy (MPG) of this car is 41.32.
