New York City Airbnb Open Data

Airbnb listings and metrics in NYC, NY, USA (2019)

www.kaggle.com

Step 1. 데이터셋 준비하기

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as snsColab Notebook에 Kaggle API 세팅하기

import os

# os.environ을 이용하여 Kaggle API Username, Key 세팅하기

os.environ['KAGGLE_USERNAME'] = 'jhighllight'

os.environ['KAGGLE_KEY'] = 'xxxxxxxxxxxxxxxxxxxxx'데이터 다운로드 및 압축 해제하기

# Linux 명령어로 Kaggle API를 이용하여 데이터셋 다운로드하기 (!kaggle ~)

# Linux 명령어로 압축 해제하기

!rm *.*

!kaggle datasets download -d dgomonov/new-york-city-airbnb-open-data

!unzip '*.zip'Downloading new-york-city-airbnb-open-data.zip to /content

0% 0.00/2.44M [00:00 <?,? B/s]

100% 2.44M/2.44M [00:00 <00:00, 163MB/s]

Archive: new-york-city-airbnb-open-data.zip

inflating: AB_NYC_2019.csv

inflating: New_York_City_. png

Pandas 라이브러리로 csv파일 읽어 들이기

df = pd.read_csv('/content/AB_NYC_2019.csv')Step 2. EDA 및 데이터 기초 통계 분석

불필요한 데이터 데이터프레임에서 제거하기

# DataFrame에서 제공하는 메소드를 이용하여 각 데이터프레임의 구조 분석하기 (head(), info(), describe())

# 데이터프레임에서 불필요한 컬럼 제거하기

df.head()

df['room_type'].value_counts()Entire home/apt 25409

Private room 22326

Shared room 1160

Name: room_type, dtype: int64

df.info()<class 'pandas.core.frame.DataFrame'>

RangeIndex: 48895 entries, 0 to 48894

Data columns (total 16 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 id 48895 non-null int64

1 name 48879 non-null object

2 host_id 48895 non-null int64

3 host_name 48874 non-null object

4 neighbourhood_group 48895 non-null object

5 neighbourhood 48895 non-null object

6 latitude 48895 non-null float64

7 longitude 48895 non-null float64

8 room_type 48895 non-null object

9 price 48895 non-null int64

10 minimum_nights 48895 non-null int64

11 number_of_reviews 48895 non-null int64

12 last_review 38843 non-null object

13 reviews_per_month 38843 non-null float64

14 calculated_host_listings_count 48895 non-null int64

15 availability_365 48895 non-null int64

dtypes: float64(3), int64(7), object(6)

memory usage: 6.0+ MB

df.isna().sum()id 0

name 16

host_id 0

host_name 21

neighbourhood_group 0

neighbourhood 0

latitude 0

longitude 0

room_type 0

price 0

minimum_nights 0

number_of_reviews 0

last_review 10052

reviews_per_month 10052

calculated_host_listings_count 0

availability_365 0

dtype: int64

(df['reviews_per_month'].isna() & df['last_review'].isna()).sum()10052

df['reviews_per_month'].isna().sum()10052

df['availability_365'].hist()<Axes: >

(df['availability_365'] == 0).sum()17533

df.describe()

df.columnsIndex(['id', 'name', 'host_id', 'host_name', 'neighbourhood_group',

'neighbourhood', 'latitude', 'longitude', 'room_type', 'price',

'minimum_nights', 'number_of_reviews', 'last_review',

'reviews_per_month', 'calculated_host_listings_count',

'availability_365'],

dtype='object')

Index(['id', 'name', 'host_id', 'host_name', 'neighbourhood_group',

'neighbourhood', 'latitude', 'longitude', 'room_type', 'price',

'minimum_nights', 'number_of_reviews', 'last_review',

'reviews_per_month', 'calculated_host_listings_count',

'availability_365'],

dtype='object')

df.drop(['id', 'name', 'host_name', 'latitude', 'longitude'], axis=1, inplace=True)

df.head()

수치형 데이터와 Price의 Jointplot 분석하기

df.columnsIndex(['host_id', 'neighbourhood_group', 'neighbourhood', 'room_type', 'price',

'minimum_nights', 'number_of_reviews', 'last_review',

'reviews_per_month', 'calculated_host_listings_count',

'availability_365'],

dtype='object')

sns.jointplot(x='host_id', y='price', data=df, kind='hex')<seaborn.axisgrid.JointGrid at 0x7 fbd56 a42 dc0>

sns.jointplot(x='reviews_per_month', y='price', data=df, kind='hex')<seaborn.axisgrid.JointGrid at 0x7 fbd5684 db50>

수치형 데이터와 Price의 상관성 분석하기

sns.heatmap(df.corr(), annot=True, cmap='YlOrRd')<Axes: >

범주형 데이터와 Price의 Boxplot 계열 및 Histogram 분석하기

df.columnsIndex(['host_id', 'neighbourhood_group', 'neighbourhood', 'room_type', 'price',

'minimum_nights', 'number_of_reviews', 'last_review',

'reviews_per_month', 'calculated_host_listings_count',

'availability_365'],

dtype='object')

sns.boxplot(x='neighbourhood_group', y='price', data=df)<Axes: xlabel='neighbourhood_group', ylabel='price'>

sns.boxplot(x='room_type', y='price', data=df)<Axes: xlabel='room_type', ylabel='price'>

Step 3. 데이터 클리닝 수행하기

미기입, 오기입 데이터 확인하기

df.columnsIndex(['host_id', 'neighbourhood_group', 'neighbourhood', 'room_type', 'price',

'minimum_nights', 'number_of_reviews', 'last_review',

'reviews_per_month', 'calculated_host_listings_count',

'availability_365'],

dtype='object')

df.isna().sum()host_id 0

neighbourhood_group 0

neighbourhood 0

room_type 0

price 0

minimum_nights 0

number_of_reviews 0

last_review 10052

reviews_per_month 10052

calculated_host_listings_count 0

availability_365 0

dtype: int64

# 각 컬럼을 분석하여 미기입/오기입된 데이터 확인하기

# Hint) 수치형 데이터는 통계를 이용해서, 범주형 데이터는 unique(), value_counts()등으로 확인

df['neighbourhood_group'].value_counts()Manhattan 21661

Brooklyn 20104

Queens 5666

Bronx 1091

Staten Island 373

Name: neighbourhood_group, dtype: int64

neigh = df['neighbourhood'].value_counts()

plt.plot(range(len(neigh)), neigh)[<matplotlib.lines.Line2D at 0x7 fbd53 e400 a0>]

df['neighbourhood'] = df['neighbourhood'].apply(lambda s: s if str(s) not in neigh[50:] else 'others')

df['neighbourhood'].value_counts()others 6248

Williamsburg 3920

Bedford-Stuyvesant 3714

Harlem 2658

Bushwick 2465

Upper West Side 1971

Hell's Kitchen 1958

East Village 1853

Upper East Side 1798

Crown Heights 1564

Midtown 1545

East Harlem 1117

Greenpoint 1115

Chelsea 1113

Lower East Side 911

Astoria 900

Washington Heights 899

West Village 768

Financial District 744

Flatbush 621

Clinton Hill 572

Long Island City 537

Prospect-Lefferts Gardens 535

Park Slope 506

East Flatbush 500

Fort Greene 489

Murray Hill 485

Kips Bay 470

Flushing 426

Ridgewood 423

Greenwich Village 392

Sunset Park 390

Chinatown 368

Sunnyside 363

SoHo 358

Prospect Heights 357

Morningside Heights 346

Gramercy 338

Ditmars Steinway 309

Theater District 288

South Slope 284

Nolita 253

Inwood 252

Gowanus 247

Elmhurst 237

Woodside 235

Carroll Gardens 233

Jamaica 231

East New York 218

Jackson Heights 186

East Elmhurst 185

Name: neighbourhood, dtype: int64

df['room_type'].value_counts()Entire home/apt 25409

Private room 22326

Shared room 1160

Name: room_type, dtype: int64

df.columnsIndex(['host_id', 'neighbourhood_group', 'neighbourhood', 'room_type', 'price',

'minimum_nights', 'number_of_reviews', 'last_review',

'reviews_per_month', 'calculated_host_listings_count',

'availability_365'],

dtype='object')

sns.rugplot(x='price', data=df, height=1)<Axes: xlabel='price'>

print(df['price'].quantile(0.95))

print(df['price'].quantile(0.005))355.0

26.0

sns.rugplot(x='minimum_nights', data=df, height=1)<Axes: xlabel='minimum_nights'>

print(df['minimum_nights'].quantile(0.98))30.0

sns.rugplot(x='availability_365', data=df, height=1)<Axes: xlabel='availability_365'>

print(df['availability_365'].quantile(0.3))0.0

아웃라이어를 제거하고 통계 재분석하기

# quantile(), drop() 등 메소드를 이용하여 outlier 제거하고 통계 재분석하기

p1 = df['price'].quantile(0.95)

p2 = df['price'].quantile(0.005)

print(p1, p2)355.0 26.0

df = df[(df['price'] < p1) & (df['price'] > p2)]

df['price'].hist()<Axes: >

mn1 = df['minimum_nights'].quantile(0.98)

print(mn1)30.0

df = df[df['minimum_nights'] < mn1]

df['minimum_nights'].hist()<Axes: >

df['is_avil_zero'] = df['availability_365'].apply(lambda x: 'Zero' if x==0 else 'Nonzero')미기입 데이터 처리하기

# fill(), dropna() 등으로 미기입된 데이터를 처리하기

df['review_exists'] = df['reviews_per_month'].isna().apply(lambda x: 'No' if x is True else 'Yes')

df.fillna(0, inplace=True)

df.isna().sum()

host_id 0

neighbourhood_group 0

neighbourhood 0

room_type 0

price 0

minimum_nights 0

number_of_reviews 0

last_review 0

reviews_per_month 0

calculated_host_listings_count 0

availability_365 0

is_avil_zero 0

review_exists 0

dtype: int64

Step 4. 모델 학습을 위한 데이터 전처리

get_dummies를 이용한 범주형 데이터 전처리

df.columnsIndex(['host_id', 'neighbourhood_group', 'neighbourhood', 'room_type', 'price', 'minimum_nights', 'number_of_reviews', 'last_review', 'reviews_per_month', 'calculated_host_listings_count', 'availability_365', 'is_avil_zero', 'review_exists'], dtype='object')

X_cat = df[['neighbourhood_group', 'neighbourhood', 'room_type', 'is_avil_zero', 'review_exists']]

X_cat = pd.get_dummies(X_cat)StandardScaler를 이용해 수치형 데이터 표준화하기

from sklearn.preprocessing import StandardScaler

df.columnsIndex(['host_id', 'neighbourhood_group', 'neighbourhood', 'room_type', 'price', 'minimum_nights', 'number_of_reviews', 'last_review', 'reviews_per_month', 'calculated_host_listings_count', 'availability_365', 'is_avil_zero', 'review_exists'], dtype='object')

# StandardScaler를 이용해 수치형 데이터를 표준화하기

scaler = StandardScaler()

X_num = df.drop(['neighbourhood_group', 'neighbourhood', 'room_type', 'price', 'last_review', 'is_avil_zero', 'review_exists'], axis=1)

scaler.fit(X_num)

X_scaled = scaler.transform(X_num)

X_scaled = pd.DataFrame(X_scaled, index=X_num.index, columns=X_num.columns)

X = pd.concat([X_scaled, X_cat], axis=1)

y = df['price']

X.head()

학습데이터와 테스트데이터 분리하기

from sklearn.model_selection import train_test_split

# train_test_split() 함수로 학습 데이터와 테스트 데이터 분리하기

X_train, X_test, y_train, y_test = train_test_split(X, y , test_size=0.3, random_state=1)Step 5. Regression 모델 학습하기

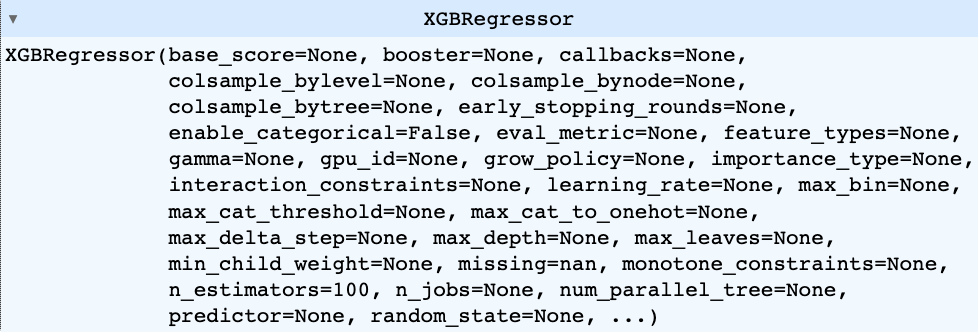

XGBoost Regression 모델 학습하기

from xgboost import XGBRegressor

# XGBRegressor 모델 생성/학습

model_reg = XGBRegressor()

model_reg.fit(X_train, y_train)

모델 학습 결과 평가하기

from sklearn.metrics import mean_absolute_error, mean_squared_error

from math import sqrt

# Predict를 수행하고 mean_absolute_error, rmse 결과 출력하기

pred = model_reg.predict(X_test)

print(mean_absolute_error(y_test, pred))

print(mean_absolute_error(y_test, pred))

print(sqrt(mean_squared_error(y_test, pred)))34.572650480520274

34.572650480520274

48.680226616285346

Step 6. 모델 학습 결과 심화 분석하기

실제 값과 추측 값의 Scatter plot 시각화하기

# y_test vs. pred Scatter 플랏으로 시각적으로 분석하기

plt.scatter(x=y_test, y=pred, alpha=0.1)

plt.plot([0,350], [0, 350], 'r-')[<matplotlib.lines.Line2D at 0x7 fbd3 e5693 d0>]

에러 값의 히스토그램 확인하기

# err의 히스토그램으로 에러율 히스토그램 확인하기

err = (pred - y_test) / y_test

sns.histplot(err)

plt.grid()

# err의 히스토그램으로 에러율 히스토그램 확인하기

err = pred - y_test

sns.histplot(err)

plt.grid()

'Python > Kaggle' 카테고리의 다른 글

| Video Game Sales with Ratings (0) | 2023.05.18 |

|---|---|

| [패캠] 우리나라의 행복지수는 몇 위? 아니, 행복지수가 도대체 뭔데? (0) | 2023.05.17 |

| Part2. Chapter 1 - 자동으로 모은 데이터는 분석하기 어렵다면서? 자동으로 모은 중고 자동차 데ᄋ.. (0) | 2023.03.26 |

| Part1. Chapter 05 - 미국의 대통령은 어떻게 뽑힐까 (0) | 2023.03.22 |

| Part1. Chapter 04 - 오늘 밤 유럽 축구, 어디가 이길까_ 데이터로 분석하고 내기르.. (0) | 2023.03.09 |