Step 1. 데이터셋 준비하기

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as snsColab Notebook에 Kaggle API 세팅하기

import os# os.environ을 이용하여 Kaggle API Username, Key 세팅하기

os.environ['KAGGLE_USERNAME'] = 'jhighllight'

os.environ['KAGGLE_KEY'] = 'xxxxxxxxxxxxxxxxxxxxxxx'데이터 다운로드 및 압축 해제하기

# Linux 명령어로 Kaggle API를 이용하여 데이터셋 다운로드하기 (!kaggle ~)

# Linux 명령어로 압축 해제하기

!kaggle datasets download -d hugomathien/soccer

!unzip '*.zip'

sqlite3와 Pandas로 sqlite 데이터베이스 읽어 들이기

import sqlite3# sqlite3.connect()와 pd.read_sql_query()로 csv파일 읽어들이기

conn = sqlite3.connect('/content/database.sqlite')

df_country = pd.read_sql_query('SELECT * from Country', conn)

df_league = pd.read_sql_query('SELECT * from League', conn)

df_match = pd.read_sql_query('SELECT * from Match', conn)

df_player = pd.read_sql_query('SELECT * from Player', conn)

df_player_att = pd.read_sql_query('SELECT * from Player_Attributes', conn)

df_team = pd.read_sql_query('SELECT * from Team', conn)

df_team_att = pd.read_sql_query('SELECT * from Team_Attributes', conn)Step 2. EDA 및 데이터 기초 통계 분석

각 데이터프레임의 구조 파악하기

# DataFrame에서 제공하는 메소드를 이용하여 각 데이터프레임의 구조 분석하기 (head(), info(), describe())

df_country.head()

df_country.info()

df_league.head()

df_league.info()

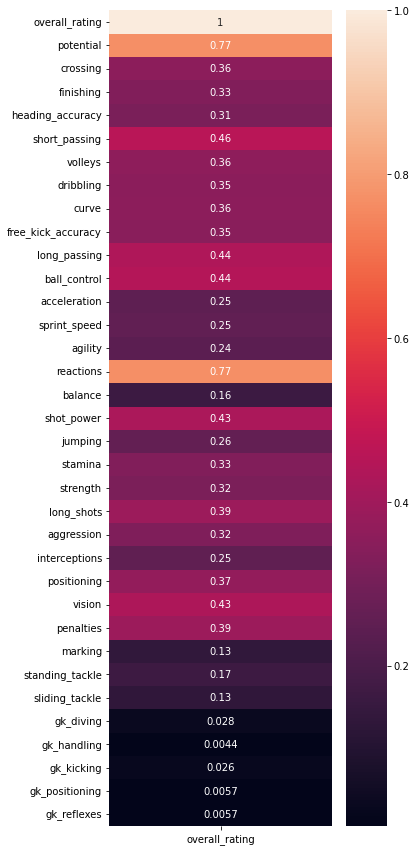

df_league['id'].unique()

df_league['country_id'].unique()

df_match.head()

for c in df_match.columns:

print(c)id country_id league_id season stage date match_api_id home_team_api_id away_team_api_id home_team_goal away_team_goal home_player_X1 home_player_X2 home_player_X3 home_player_X4 home_player_X5 home_player_X6 home_player_X7 home_player_X8 home_player_X9 home_player_X10 home_player_X11 away_player_X1 away_player_X2 away_player_X3 away_player_X4 away_player_X5 away_player_X6 away_player_X7 away_player_X8 away_player_X9 away_player_X10 away_player_X11 home_player_Y1 home_player_Y2 home_player_Y3 home_player_Y4 home_player_Y5 home_player_Y6 home_player_Y7 home_player_Y8 home_player_Y9 home_player_Y10 home_player_Y11 away_player_Y1 away_player_Y2 away_player_Y3 away_player_Y4 away_player_Y5 away_player_Y6 away_player_Y7 away_player_Y8 away_player_Y9 away_player_Y10 away_player_Y11 home_player_1 home_player_2 home_player_3 home_player_4 home_player_5 home_player_6 home_player_7 home_player_8 home_player_9 home_player_10 home_player_11 away_player_1 away_player_2 away_player_3 away_player_4 away_player_5 away_player_6 away_player_7 away_player_8 away_player_9 away_player_10 away_player_11 goal shoton shotoff foulcommit card cross corner possession B365H B365D B365A BWH BWD BWA IWH IWD IWA LBH LBD LBA PSH PSD PSA WHH WHD WHA SJH SJD SJA VCH VCD VCA GBH GBD GBA BSH BSD BSA

for c, num in zip(df_match.columns, df_match.isna().sum()):

print(c, num)id 0 country_id 0 league_id 0 season 0 stage 0 date 0 match_api_id 0 home_team_api_id 0 away_team_api_id 0 home_team_goal 0 away_team_goal 0 home_player_X1 1821 home_player_X2 1821 home_player_X3 1832 home_player_X4 1832 home_player_X5 1832 home_player_X6 1832 home_player_X7 1832 home_player_X8 1832 home_player_X9 1832 home_player_X10 1832 home_player_X11 1832 away_player_X1 1832 away_player_X2 1832 away_player_X3 1832 away_player_X4 1832 away_player_X5 1832 away_player_X6 1832 away_player_X7 1832 away_player_X8 1832 away_player_X9 1833 away_player_X10 1833 away_player_X11 1839 home_player_Y1 1821 home_player_Y2 1821 home_player_Y3 1832 home_player_Y4 1832 home_player_Y5 1832 home_player_Y6 1832 home_player_Y7 1832 home_player_Y8 1832 home_player_Y9 1832 home_player_Y10 1832 home_player_Y11 1832 away_player_Y1 1832 away_player_Y2 1832 away_player_Y3 1832 away_player_Y4 1832 away_player_Y5 1832 away_player_Y6 1832 away_player_Y7 1832 away_player_Y8 1832 away_player_Y9 1833 away_player_Y10 1833 away_player_Y11 1839 home_player_1 1224 home_player_2 1315 home_player_3 1281 home_player_4 1323 home_player_5 1316 home_player_6 1325 home_player_7 1227 home_player_8 1309 home_player_9 1273 home_player_10 1436 home_player_11 1555 away_player_1 1234 away_player_2 1278 away_player_3 1293 away_player_4 1321 away_player_5 1335 away_player_6 1313 away_player_7 1235 away_player_8 1341 away_player_9 1328 away_player_10 1441 away_player_11 1554 goal 11762 shoton 11762 shotoff 11762 foulcommit 11762 card 11762 cross 11762 corner 11762 possession 11762 B365H 3387 B365D 3387 B365A 3387 BWH 3404 BWD 3404 BWA 3404 IWH 3459 IWD 3459 IWA 3459 LBH 3423 LBD 3423 LBA 3423 PSH 14811 PSD 14811 PSA 14811 WHH 3408 WHD 3408 WHA 3408 SJH 8882 SJD 8882 SJA 8882 VCH 3411 VCD 3411 VCA 3411 GBH 11817 GBD 11817 GBA 11817 BSH 11818 BSD 11818 BSA 11818

df_match.drop(df_match.columns[-38:], axis=1, inplace=True)df_match.head()

df_player.head()

df_player_att.head()

df_team.head()

df_team_att.head()

데이터프레임간의 관계 파악하기

# 데이터프레임 간 중복되는 Column이 있는지 확인하고 유용한 Column 식별하기

df_player_att['player_api_id'].value_counts()

df_match['home_player_1'].dropna().apply(int).map(df_player_att.groupby('player_api_id').mean()['overall_rating']).isna().sum()0

df_team_att['team_api_id'].value_counts()

df_match['away_team_api_id'].map(df_team_att.groupby('team_api_id').mean()['buildUpPlaySpeed']).isna().sum()178

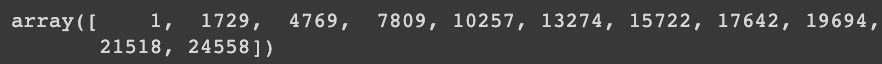

선수 특성 사이의 상관성 파악하기

# DataFrame의 corr() 메소드와 Seaborn의 heatmap() 메소드를 이용하여 Pearson's correlation 시각화하기

fig = plt.figure(figsize=(5, 15))

sns.heatmap(df_player_att.drop(['id', 'player_fifa_api_id', 'player_api_id'], axis=1).corr()[['overall_rating']], annot=True)<AxesSubplot:>

매치 데이터프레임에 팀 특성 데이터프레임 통합하기

# DataFrame의 map() 메소드를 활용하여 데이터프레임 통합하기

df_team_att.columns

df_team_att.drop('buildUpPlayDribbling', axis=1, inplace=True)df_team_att.columns

def most(x):

return x.value_counts().index[0]team_map = df_team_att.groupby('team_api_id').aggregate(

{

'buildUpPlaySpeed': 'mean',

'buildUpPlaySpeedClass': most,

'buildUpPlayDribblingClass': most,

'buildUpPlayPassing': 'mean',

'buildUpPlayPassingClass': most,

'buildUpPlayPositioningClass': most,

'chanceCreationPassing': 'mean',

'chanceCreationPassingClass': most,

'chanceCreationCrossing': 'mean',

'chanceCreationCrossingClass': most,

'chanceCreationShooting': 'mean',

'chanceCreationShootingClass': most,

'chanceCreationPositioningClass': most,

'defencePressure': 'mean',

'defencePressureClass': most,

'defenceAggression': 'mean',

'defenceAggressionClass': most,

'defenceTeamWidth': 'mean',

'defenceTeamWidthClass': most,

'defenceDefenderLineClass': most

}

)team_map

df = df_match[['home_team_goal', 'away_team_goal']].copy()for team in ['home_', 'away_']:

team_map.index.name = team + 'team_api_id'

for col in team_map.columns:

df[team + col] = df_match[team_map.index.name].map(team_map[col])df.dropna(inplace=True)df.head()

홈과 어웨이의 골 수를 승-무-패 범주로 변환하기

# 홈과 어웨이의 골 수를 범주형 데이터로 변환하기 (0: 홈팀 승, 1: 무승부, 2: 어웨이팀 승)

df['matchResult'] = df[['home_team_goal', 'away_team_goal']].aggregate(lambda x: 0 if x[0] > x[1] else 2, axis=1)df.drop(['home_team_goal', 'away_team_goal'], axis=1, inplace=True)Step 3. 모델 학습을 위한 데이터 전처리

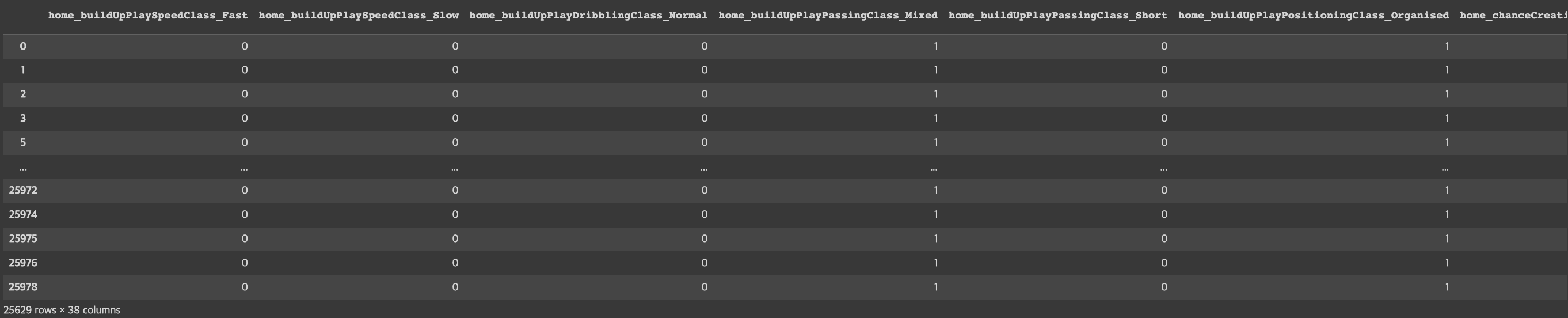

get_dummies를 이용하여 범주형 데이터 전처리하기

col_cats = list(filter(lambda s: s.find('Class') >= 0, df.columns))

df_cats = pd.get_dummies(df[col_cats], drop_first=True)

df_cats

StandardScaler를 이용해 수치형 데이터 표준화하기

from sklearn.preprocessing import StandardScaler# StandardScaler를 이용해 수치형 데이터를 표준화하기

X_num = df.drop(['matchResult'] + col_cats, axis=1)

scaler = StandardScaler()

scaler.fit(X_num)

X_scaled = scaler.transform(X_num)

X_scaled = pd.DataFrame(data=X_scaled, index=X_num.index, columns=X_num.columns)

X_cat = df_cats

X = pd.concat([X_scaled, X_cat], axis=1)

y = df['matchResult']학습데이터와 테스트데이터 분리하기

from sklearn.model_selection import train_test_split# train_test_split() 함수로 학습 데이터와 테스트 데이터 분리하기

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=1)Step 4. Classification 모델 학습하기

Logistic Regression 모델 생성/학습하기

from sklearn.linear_model import LogisticRegression# LogisticRegression 모델 생성/학습

model_lr = LogisticRegression(max_iter=10000)

model_lr.fit(X_train, y_train)

모델 학습 결과 평가하기

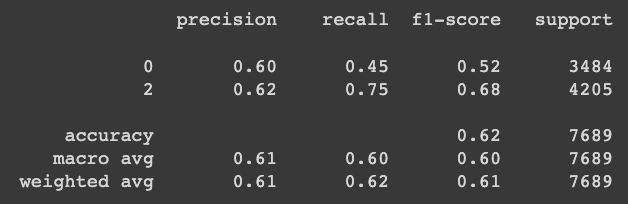

from sklearn.metrics import classification_report# Predict를 수행하고 classification_report() 결과 출력하기

pred = model_lr.predict(X_test)

print(classification_report(y_test, pred))

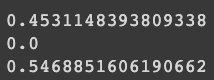

print(sum((y_test == 0)) / len(y_test))

print(sum((y_test == 1)) / len(y_test))

print(sum((y_test == 2)) / len(y_test))

Step5 모델 학습 결과 심화 분석하기

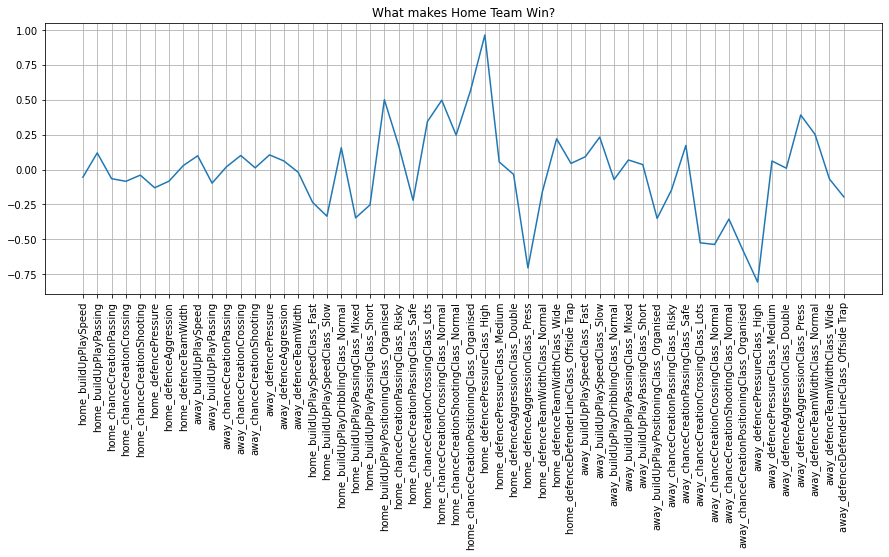

Logistic Regression 모델 계수로 상관성 파악하기

# Logistic Regression 모델의 coef_ 속성을 plot하기

fig = plt.figure(figsize=(15, 5))

plt.plot(X.columns, model_lr.coef_[0])

plt.xticks(rotation=90)

plt.title('What makes Home Team Win?')

plt.grid()

plt.show()

Step6 모델 성능 개선하기

매치 데이터프레임에 선수 특성 데이터프레임 통합하기

# 선수 특성 중 유의미한 정보를 매치 데이터프레임에 통합하기

df = df_match[['home_team_goal', 'away_team_goal']].copy()for team in ['home_', 'away_']:

team_map.index.name = team + 'team_api_id'

for col in team_map.columns:

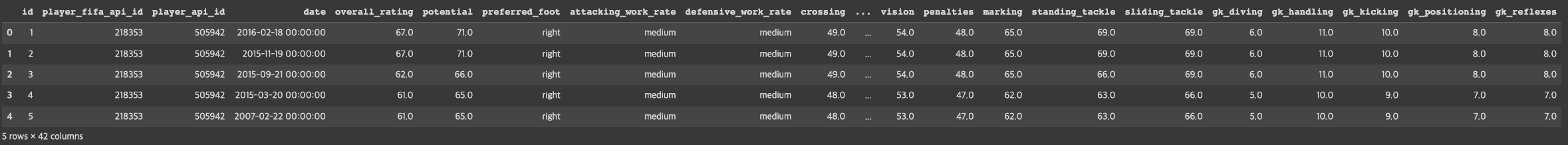

df[team + col] = df_match[team_map.index.name].map(team_map[col])df_player_att.head()

player_map = df_player_att.groupby('player_api_id').mean()['overall_rating']

player_map

for col in (s + str(idx) for s in ['home_player_', 'away_player_'] for idx in range(1, 12)):

df[col + '_rating']= df_match[col].map(player_map)df.dropna(inplace=True)df.head()

# train_test_split() 함수로 학습 데이터와 테스트 데이터 분리하기

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=1)모델 재학습하여 평가하기

# LogisticRegression 모델 생성/학습

model_lr = LogisticRegression(max_iter=10000)

model_lr.fit(X_train, y_train)

# Predict를 수행하고 classification_report() 결과 출력하기

pred = model_lr.predict(X_test)

print(classification_report(y_test, pred))

# Logistic Regression 모델의 coef_ 속성을 plot하기

fig = plt.figure(figsize=(15, 5))

plt.plot(X.columns, model_lr.coef_[0])

plt.xticks(rotation=90)

plt.title('What makes Home Team Win?')

plt.grid()

plt.show()